20x inference acceleration for long sequence length tasks on Intel Xeon Max Series CPUs

Numenta technologies running on the Intel 4th Gen Xeon Max Series CPU enable unparalleled performance speedups for longer sequence length tasks.

Improving model accuracy comes at a price

As companies explore large language models (LLMs), one of the first things they must decide is what level of accuracy the model needs to deliver. Typically, achieving higher accuracy means using a larger model. Larger models can process more data, which means they can learn from larger and more diverse sets of examples. The smaller the model, the harder it is for it to recognize patterns and understand context.

However, increasing the size of the model often comes with a performance hit because of the increased computational requirements, longer training times, and potential overfitting to the training data. Companies are left to find the right balance between model accuracy and speed.

Higher accuracies at the same compute costs

With our neuroscience-based optimization techniques, we shift the model accuracy scaling laws such that at a fixed cost, or a given performance level, our models achieve higher accuracies than their standard counterparts. As a result, models that are significantly larger can be run as quickly and cost-effectively as smaller models. This allows you to get both accuracy and performance speed-ups, and you can decide what range works best for your specific problem. If a small accuracy improvement is all that’s needed, you can get significant speed-ups. If accuracy matters most, you can maximize accuracy while still getting a bit of a performance bump.

No accuracy compromise: 3-60x performance speedups and better accuracy

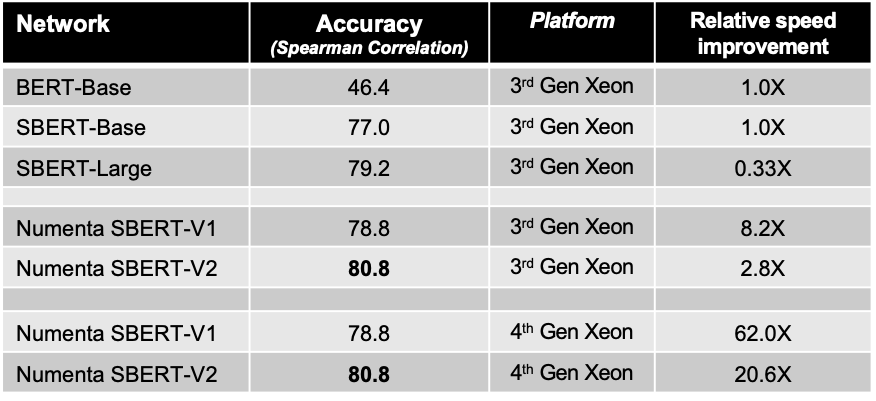

This table shows results for standard and Numenta-optimized models on the Semantic Textual Similarity Benchmark (STSB), a popular NLP benchmark that measures how effectively models capture nuanced semantic similarities between sentence pairs.
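To make the benchmark concrete: STSB results, including those in the Sentence-BERT paper, are commonly reported as the Spearman rank correlation between the cosine similarity of two sentence embeddings and a human-assigned similarity score, multiplied by 100. The following is a minimal sketch under that assumption. The function names and toy vectors are illustrative only and are not Numenta's evaluation code.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def stsb_style_score(embedding_pairs, gold_scores):
    """Spearman correlation x 100 between predicted and gold similarity."""
    predicted = [cosine_similarity(u, v) for u, v in embedding_pairs]
    return 100.0 * spearman(predicted, gold_scores)


# Toy 2-D "embeddings" (hypothetical; real sentence embeddings have
# hundreds of dimensions) with made-up gold scores on a 0-5 scale.
pairs = [
    ([1.0, 0.0], [1.0, 0.1]),  # nearly identical meaning
    ([1.0, 0.0], [0.5, 0.5]),  # somewhat related
    ([1.0, 0.0], [0.0, 1.0]),  # unrelated
]
gold = [4.8, 2.5, 0.2]
score = stsb_style_score(pairs, gold)
print(f"STSB-style score: {score:.1f}")
```

Because the metric depends only on rank order, a model scores well when it orders sentence pairs the way human annotators do, even if its raw similarity values are on a different scale.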

The first few rows in the table are standard models. BERT is designed to model the context of words within a sentence, while SBERT is trained to measure how similar two sentences are, so SBERT models achieve much better accuracy on this benchmark. In this case, SBERT-Base gets a score of 77.0, which is dramatically higher than the BERT-Base score of 46.4. Because the two models share the same architecture, SBERT-Base is no faster than BERT-Base. The SBERT-Large model is 3 times as big, which gives it an even higher accuracy score of 79.2, but makes it 3 times slower than the Base models.

Now if we look at the Numenta models running on Intel’s 3rd Gen Xeon, we see that the first model offers higher accuracy than SBERT-Base and an 8x speedup. The second Numenta model offers even higher accuracy than SBERT-Large, but rather than running 3 times slower than BERT-Base, it runs 3 times faster, which by these figures makes it roughly 9 times faster than SBERT-Large. When our models run on Intel’s 4th Gen Xeon, the speedups are even more dramatic, at 20-60x.

* The above BERT-Base and SBERT-Base accuracy baselines were sourced from the paper “Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks” by Reimers and Gurevych. To facilitate comparison, our BERT models were pre-trained on the same dataset. Higher accuracies on STSB can be obtained by using different model architectures and more extensive pre-training regimes.

Achieving an ideal balance between accuracy and performance

For many companies deploying LLMs, the choice of model size is dictated by requirements such as latency or cost. By shifting the model accuracy curve, our AI platform allows you to run larger models as quickly and cost-effectively as smaller, unoptimized models. This means you can:
