This month marked an exciting milestone for Numenta. On Monday (Sep 11), at the Efficient Generative AI Summit, our CEO Subutai Ahmad delivered the keynote and unveiled our first commercial AI product built on The Thousand Brains Theory of Intelligence – the Numenta Platform for Intelligent Computing (NuPIC). Built on decades of neuroscience research, NuPIC allows large language models (LLMs) to run efficiently on CPUs, providing the best price-performance for AI.

The Challenge: Navigating the AI Landscape

“If you look at so many different industries, whether it’s healthcare or medical, finance, or education, Generative AI and ChatGPT-like applications are turning the business world inside out. Then if you look underneath at what’s going on, there are significant issues in deploying these applications in production in enterprises,” Subutai explained to the audience.

The issues Subutai mentioned, which most enterprises face when deploying LLMs in production, include significant compute costs, inefficiencies, and GPU-related infrastructure complexities. There are also issues with model stability, potential hallucinations in answers, data privacy and general confusion in the industry.

The Solution: NuPIC Delivers Powerful Inference Performance

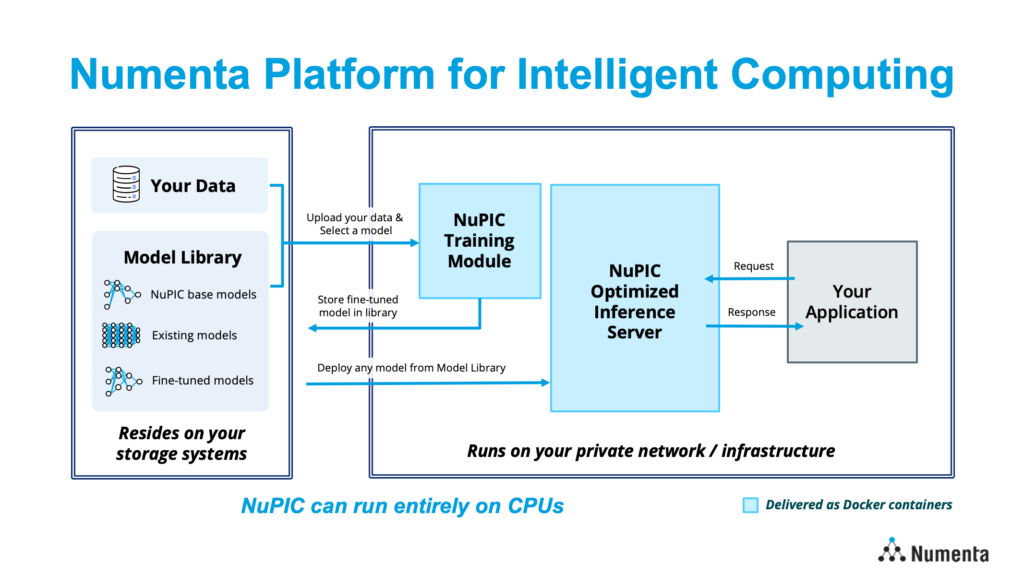

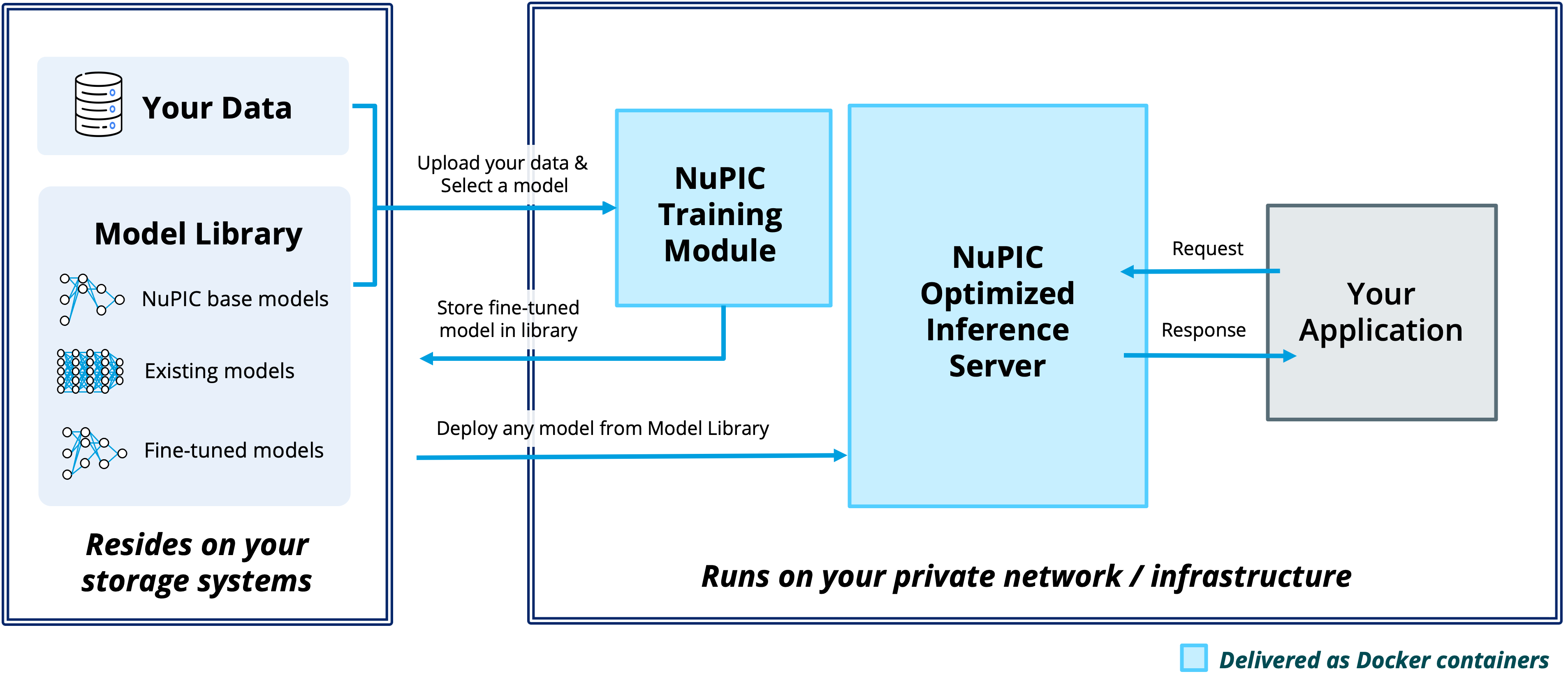

We think there has to be a better way than using bigger models, more data, and more chips, and throwing more brute-force energy at the problem. That’s why we built the Numenta Platform for Intelligent Computing (NuPIC). NuPIC is an AI software platform that fills the gap between the compute power required for deep learning and what today’s hardware systems can provide. It applies decades of neuroscience research to AI to provide significant performance improvements to large language models and transformer models, all running entirely on CPUs.

There are three components to NuPIC:

- NuPIC Inference Server: At the heart of NuPIC is our cutting-edge inference server, which allows you to run predictions at lightning speeds without compromising on accuracy. Designed for both on-premises and cloud deployments, it ensures that your AI solutions are scalable and robust. You can deploy a model you have fine-tuned, an optimized NuPIC model, or a model of your own.

- NuPIC Training Module: Whether it’s a niche dataset or a specific application you have in mind, our training module allows you to tailor pre-trained models to your unique business requirements and get an additional boost in accuracy.

- NuPIC Model Library: Our flexible model library makes it easy to choose the right tool for the right job. We offer a range of production-ready models optimized for various applications and performance requirements. From sentiment analysis to text summarization, these models are ready to use, saving you time and computational resources.

The Benefits: No GPUs Required

NuPIC today is ideal for natural language processing applications like sentiment analysis, sentence similarity, and text summarization. Some of the many benefits include:

- Runs different AI workloads and models efficiently on CPUs: High quality shouldn’t always mean high cost. With NuPIC, you can serve both generative and non-generative models on a single CPU server with consistently high throughput and low inference latency. CPUs give you the flexibility to scale with ease, eliminating the need for complex, expensive, and hard-to-obtain GPU infrastructure.

- Provides uncompromised data privacy, security, and control: Data privacy is becoming more important than ever. With that in mind, NuPIC is designed to run entirely within your infrastructure, either on-premises or in a private cloud on any major cloud provider. You are in complete control of your data and models, and internal data never leaves company walls, which supports consistent, reliable behavior and stronger data compliance.

- Allows you to quickly prototype LLM-based solutions, then deploy at scale with no deep learning expertise: NuPIC is backed by a dedicated team of AI experts who can help simplify the complexities of deploying LLMs in production and provide a seamless experience. Because NuPIC is delivered as a Docker container, you can rapidly prototype, iterate, and scale your AI solutions using standard MLOps tools and processes.

NuPIC in Action: Finding the Right Balance

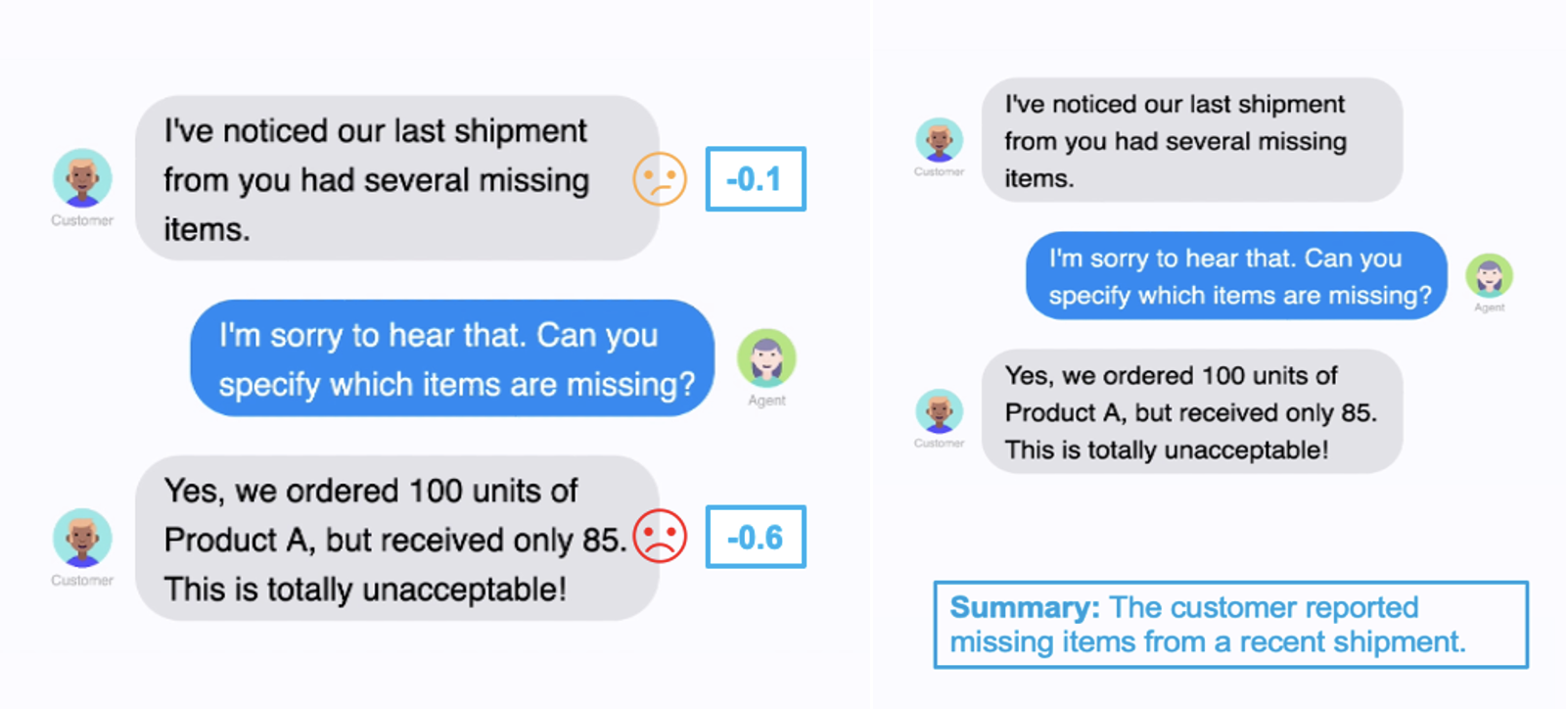

In the keynote, Subutai provided examples of how NuPIC’s first customers are using the platform. Imagine a company drowning in endless customer inquiries. While the allure of generative AI models like GPT is strong, they come with potential pitfalls, such as steep costs or incorrect responses. Instead, Subutai pointed out the value of non-generative LLMs, like BERT, which excel in understanding and categorizing text, to automate responses efficiently.

The crux of the message was not to get swayed solely by the hype surrounding generative AI. To truly harness the potential of AI, businesses should consider a mix-and-match approach and use the right model for the job. We often find that marrying GPT’s generative capabilities with BERT’s analytical strength offers a balanced and efficient AI solution. This approach promises faster, better-informed responses, which improves customer satisfaction and speeds up issue resolution.
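As a rough illustration of the mix-and-match idea, the sketch below sends each customer inquiry through a non-generative classifier first and falls back to a generative model only when the classifier is not confident. Everything in it is hypothetical: `classify_intent`, `generate_reply`, the canned replies, and the 0.8 threshold are stand-ins chosen for illustration, not NuPIC APIs. In a real system the two functions would call a BERT-style model and a GPT-style model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Hypothetical canned answers keyed by intent label (illustrative only).
CANNED_REPLIES: Dict[str, str] = {
    "reset_password": "You can reset your password from the account settings page.",
    "billing": "Your invoices are available under Billing in your account.",
}


@dataclass
class Reply:
    text: str
    source: str  # "classifier" or "generator"


def route_inquiry(
    inquiry: str,
    classify_intent: Callable[[str], Tuple[str, float]],
    generate_reply: Callable[[str], str],
    threshold: float = 0.8,  # assumed cutoff, tune for your own data
) -> Reply:
    """Use a canned reply when the classifier is confident, otherwise generate."""
    intent, confidence = classify_intent(inquiry)
    if confidence >= threshold and intent in CANNED_REPLIES:
        return Reply(CANNED_REPLIES[intent], "classifier")
    return Reply(generate_reply(inquiry), "generator")


# Stand-in models so the sketch runs without any ML dependencies.
def toy_classifier(inquiry: str) -> Tuple[str, float]:
    text = inquiry.lower()
    if "password" in text:
        return "reset_password", 0.95
    if "invoice" in text or "bill" in text:
        return "billing", 0.90
    return "unknown", 0.30


def toy_generator(inquiry: str) -> str:
    return f"Thanks for reaching out. A specialist will follow up on: {inquiry}"


if __name__ == "__main__":
    for q in ["I forgot my password", "Can your product integrate with our CRM?"]:
        reply = route_inquiry(q, toy_classifier, toy_generator)
        print(f"[{reply.source}] {reply.text}")
```

Passing the two models in as plain callables keeps the routing logic independent of any particular serving stack, so either model can be swapped without touching the router.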

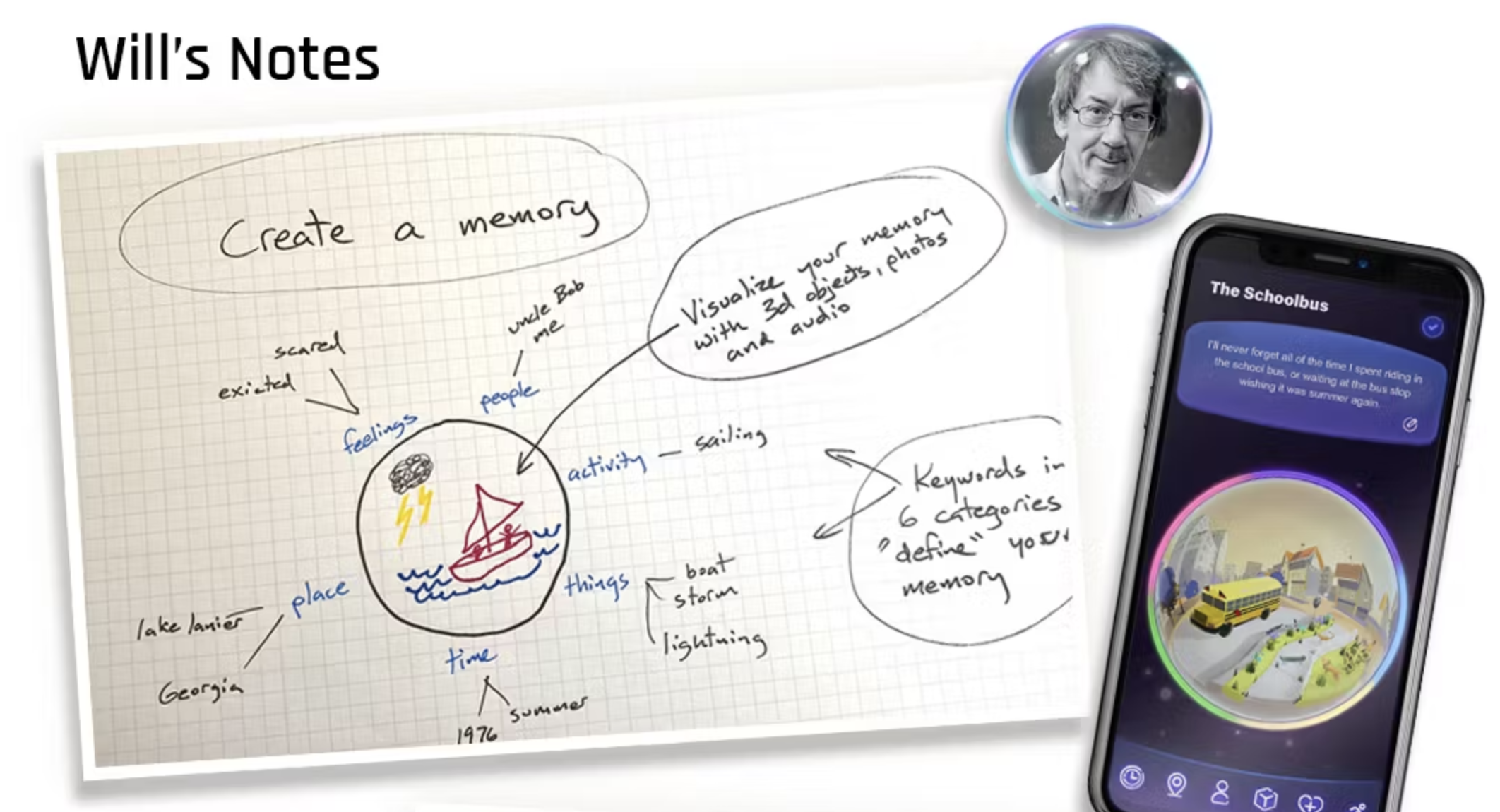

Moreover, NuPIC lets you run both non-generative and generative models simultaneously on a single CPU server, allowing you to leverage the best of both worlds without any technical bottlenecks. Gallium Studios, for example, is harnessing the power of NuPIC for its latest AI simulation game, Proxi. Proxi allows users to uniquely personalize their gameplay based on their memories and interactions with the game.

“With NuPIC, we can run LLMs with incredible performance on CPUs and use both generative and non-generative models as needed. And, because everything is on-prem, we have full control of models and data,” writes Lauren Elliott, CEO of Gallium Studios.

New Era of Intelligent Computing

This new era of neuroscience-driven AI is just the beginning. As the AI landscape and our neuroscience research continue to evolve, so will NuPIC. We have a robust roadmap based on our neuroscience discoveries. Our commitment is to remain at the forefront of AI advancements, always bringing you the best tools, features, and experiences.

If you’d like to stay informed about product updates, new features, or any NuPIC-related news, sign up here to join our NuPIC mailing list.

If you’re interested in exploring how your company can benefit from NuPIC, you can request a demo here.