This article originally appeared on Fortune.com.

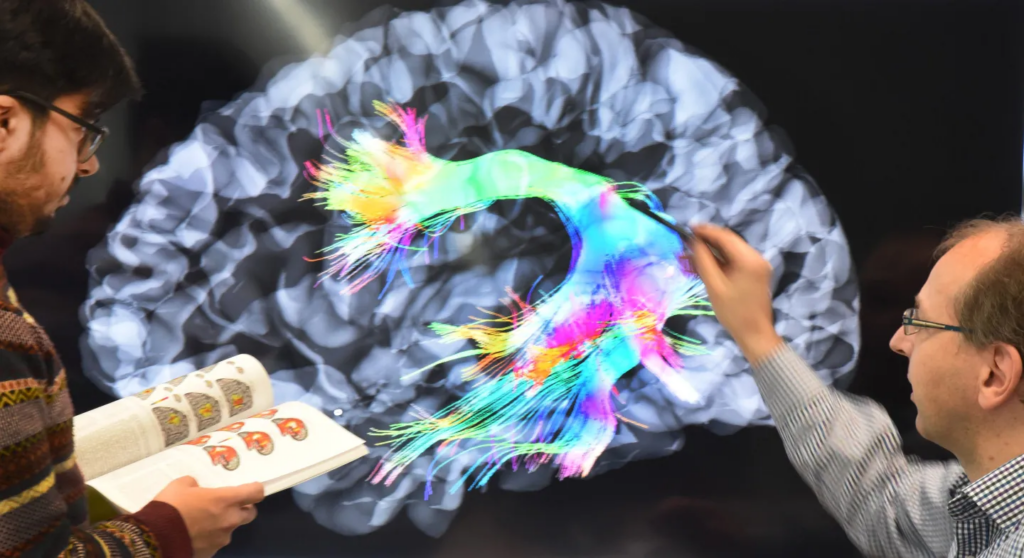

With all the hype surrounding ChatGPT, most people are giddy with the promise of artificial intelligence, yet they are overlooking its pitfalls. If we want to have genuinely intelligent machines that understand their environments, learn continuously, and help us every day, we need to apply neuroscience to deep-learning A.I. models. Yet with a few exceptions, the two disciplines have remained surprisingly isolated for decades.

That wasn’t always the case. In the 1940s, Donald Hebb and others came up with theories of how neurons learn, inspiring the first deep-learning models. Then in the 1950s and ’60s, David Hubel and Torsten Wiesel worked out how the brain’s perceptual system processes visual information, research that later won them the Nobel Prize. That work had a big impact on convolutional neural networks, which are a big part of A.I. deep learning today.

The brain’s superpowers

While neuroscience as a field has exploded over the last 20 to 30 years, almost none of these more recent breakthroughs are evident in today’s A.I. systems. The average A.I. professional today is unaware of these advances and doesn’t see how recent neuroscience breakthroughs could have any impact on A.I. That must change if we want A.I. systems that can push the boundaries of science and knowledge.

For example, we now know there’s a common circuit in our brain that can be used as a template for A.I.

The average adult human brain consumes about 20 watts of power, or less than half of what a traditional incandescent light bulb draws. In January, ChatGPT consumed roughly as much electricity as 175,000 people. Given ChatGPT’s meteoric rise in adoption, it is now consuming as much electricity per month as 1,000,000 people. A paper from the University of Massachusetts Amherst states that “training a single A.I. model can emit as much carbon as five cars in their lifetimes.” Yet this analysis pertained to only one training run. When the model is improved by training repeatedly, the energy use is vastly greater.

In addition to energy consumption, the computational resources needed to train these A.I. systems have been doubling every 3.4 months since 2012. Today, with the incredible rise in A.I. usage, it is estimated that inference costs (and power usage) are at least 10 times higher than training costs. It’s completely unsustainable.
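To make the doubling figure concrete, here is a minimal back-of-the-envelope sketch. It assumes the 3.4-month doubling period holds steadily, which is a simplification; the function name `growth_factor` is purely illustrative and not taken from any cited study. Under that assumption, compute needs would grow by a factor of roughly 11 to 12 in a single year.

```python
def growth_factor(months_elapsed: float, doubling_months: float = 3.4) -> float:
    """Return how many times larger a quantity becomes after `months_elapsed`
    months, assuming it doubles every `doubling_months` months."""
    return 2.0 ** (months_elapsed / doubling_months)


# Growth over one year and over two years, under the steady-doubling assumption.
one_year = growth_factor(12)
two_years = growth_factor(24)

print(f"Growth after 12 months: about {one_year:.1f}x")
print(f"Growth after 24 months: about {two_years:.0f}x")
```

Because the growth compounds, two years of doubling at this pace multiplies the one-year factor by itself rather than simply doubling it.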

The brain not only uses a tiny fraction of the energy used by large A.I. models, but it is also “truly” intelligent. Unlike A.I. systems, the brain can understand the structure of its environment to make complex predictions and carry out intelligent actions. And unlike A.I. models, humans learn continuously and incrementally. Code, by contrast, doesn’t yet truly “learn.” If an A.I. model makes a mistake today, it will continue to repeat that mistake until it is retrained using fresh data.

How neuroscience can turbocharge A.I. performance

Despite the escalating need for cross-disciplinary collaboration, cultural differences between neuroscientists and A.I. practitioners make communication difficult. In neuroscience, experiments require a tremendous amount of detail and each finding can take two to three years’ worth of painstaking recordings, measurements, and analysis. When research papers are published, the detail often comes across as gobbledygook to A.I. professionals and computer scientists.

How can we bridge this gap? First, neuroscientists need to step back and explain their concepts from a big-picture standpoint, so their findings make sense to A.I. professionals. Second, we need more researchers with hybrid A.I.-neuroscience roles to help fill the gap between the two fields. Through interdisciplinary collaboration, A.I. researchers can gain a better understanding of how neuroscientific findings can be translated into brain-inspired A.I.

Recent breakthroughs prove that applying brain-based principles to large language models can increase efficiency and sustainability by orders of magnitude. In practice, this means mapping neuroscience-based logic to the algorithms, data structures, and architectures running the A.I. model so that it can learn quickly on very little training data, just like our brains.

Several organizations are making progress in applying brain-based principles to A.I., including government agencies, academic researchers, Intel, Google DeepMind, and small companies like Cortical.io (Cortical uses Numenta’s technology, and Numenta owns a stake in Cortical as part of our licensing agreement). This work is essential if we are to expand A.I. efforts while simultaneously protecting the climate as deep-learning systems today move toward ever-larger models.

From the smallpox vaccine to the light bulb, almost all of humanity’s greatest breakthroughs have come from multiple contributions and interdisciplinary collaboration. That must happen with A.I. and neuroscience as well.

We need a future where A.I. systems are capable of truly interacting with scientists, helping them create and run experiments that push the boundaries of human knowledge. We need A.I. systems that genuinely enhance human capabilities, learning alongside all of us and helping us in all aspects of our lives.

Whether we like it or not, A.I. is here. We must make it sustainable and efficient by bridging the neuroscience-A.I. gap. Only then can we apply the right interdisciplinary research and commercialization, education, policies, and practices to A.I. so it can be used to improve the human condition.