The rise of ChatGPT has underscored the significance of Transformers and Large Language Models (LLMs) in AI. Yet despite the undeniable benefits they offer, deploying LLMs in production is anything but straightforward. They can be costly to deploy, hard to manage, and challenging to scale – not to mention the data privacy and security issues they introduce. Almost every customer we talk to today has questions about LLMs. In this blog post, we’ll share the 5 most common questions we receive and walk through how we address each one.

Q: How can I bring generative AI capabilities in-house?

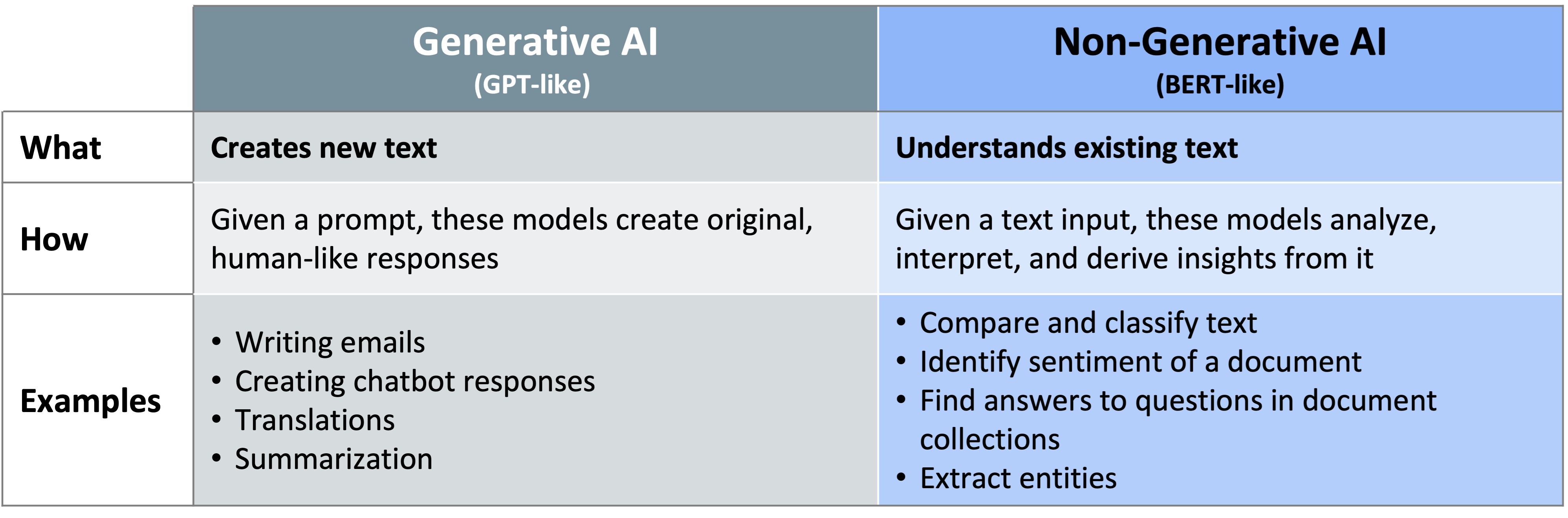

Because of the hyper-focus on generative AI and ChatGPT, many companies are looking at GPT models as the de facto starting point for what they want to do. In reality, most use cases today do not require a generative model. They can be solved much more efficiently using non-generative, BERT-like models. To properly position the tradeoffs, you want to start by looking at the business problem you’re trying to solve. Does it require the creation of new text? Do you need to generate plausible, human-like responses that can work for a variety of prompts? If so, then you may need a generative model. But if you’re looking for answers within a given text, and want to avoid the possibility of hallucinating or giving unsafe and unpredictable answers, then a non-generative model may work best.

If you do need generative AI models, don’t assume that you need a behemoth 100B+ parameter GPT model to solve your problem. We are seeing that smaller, specialized models offer several advantages. In many cases, fine-tuning a small model on the order of 100M – 600M parameters performs better than in-context learning using multi-billion parameter GPT models.

Q: How do I pick the best model for my use case?

With the number of models available today, it can be overwhelming to know which one to use. There are many different variables to consider that will help shape your decision. One primary consideration might be how accurate the model is in providing the right results. Different models provide different levels of accuracy, and at some level, the incremental benefits might provide diminishing returns.

However, picking a model is rarely as simple as opting for one with the highest accuracy. Other factors come into play, such as how quickly the model can produce results. For instance, if you’re running a time-sensitive application, speed may matter more than accuracy.

Additionally, you’ll want to weigh both speed and accuracy against cost. More often than not, your budget will help dictate your choice of model. Factors such as computational resource requirements, maintenance, regulatory or compliance issues, and data privacy might also influence your decision.

Ultimately, choosing the right model is a balancing act that requires looking at the whole picture.

Q: What are some of the more common use cases Numenta has seen?

The use case we see most when we talk to customers is document understanding, which is the ability to interpret the content, structure and context within large volumes of text documents. Document understanding requires LLMs that have been trained on massive amounts of data to be able to turn unstructured data into structured data that can then be analyzed, organized and categorized in meaningful ways. Examples vary by industry. Contract analysis is a popular example that involves using LLMs to analyze legal contracts, extract relevant information, accelerate the review process, and make contracts more searchable.

Another use case we see often is sentiment analysis, which is the ability to understand customer attitudes, opinions and emotions expressed in a piece of text. This is achieved using LLMs to classify text based on sentiment. The text may come from social media posts, reviews, emails, or customer chats. In these examples, LLMs need to be able to understand nuanced emotions, recognize irony and even detect sarcasm. Sentiment analysis is crucial for anyone looking to provide more sophisticated customer support systems – ones that can recognize complex queries, understand how customers are feeling, and provide personalized responses.

Lastly, we’ve seen a surge of interest in Generative AI use cases, which include things like real-time translation, document summarization, generating intelligent code or providing human-like responses in conversational AI chatbots.

Q: What is Numenta’s neuroscience-based approach and why do we think it’s optimal?

Our technology is based upon decades of neuroscience research, which our co-founder Jeff Hawkins described in his book A Thousand Brains. Understanding the key principles that make the brain the most efficient example of an intelligent machine provides us with a unique perspective to tackle the AI challenges we face today.

We have developed an AI platform that leverages our neuroscience findings which unlocked never seen before performance improvements for LLMs on CPUs. Ultimately, as we continue to incorporate more neuroscience principles into our technology, it will unlock intelligent capabilities not possible today such as continuous learning and generalization. The substantial advancements we’ve made, backed by our rich history of neuroscience research, create a long-term roadmap towards intelligent computing.

Q: How can I get started?

To get started with our AI platform, simply reach out to us for a demo request.

With our AI platform, customers experience 10-100x speedup in inference on CPUs, which translates to dramatic cost reductions, energy efficiency, and improved productivity. You can visit our Case Study page to learn more.

Another key feature of our platform is its ability to operate entirely within your existing infrastructure. This means all your models and data always remain private and fully under your control. Furthermore, our platform is cloud-agnostic and can run on any cloud provider or on-premise. It can be easily integrated into your existing MLOps tools as a lightweight containerized solution. This allows you to run our platform on your current setup without significant overhauls or disruptions to your existing infrastructure and operations.

If you’re interested in seeing how our AI Platform can help you simplify the scalable deployment of Large Language Models, contact us to request a demo.